Authors:

(1) Abraham Owodunni, Intron Health, Masakhane (equal contribution);

(2) Aditya Yadavalli, Karya, Masakhane (equal contribution);

(3) Chris Emezue, Mila Quebec AI Institute, Lanfrica, Masakhane (equal contribution);

(4) Tobi Olatunji, Intron Health and Masakhane (equal contribution);

(5) Clinton Mbataku, AI Saturdays Lagos.


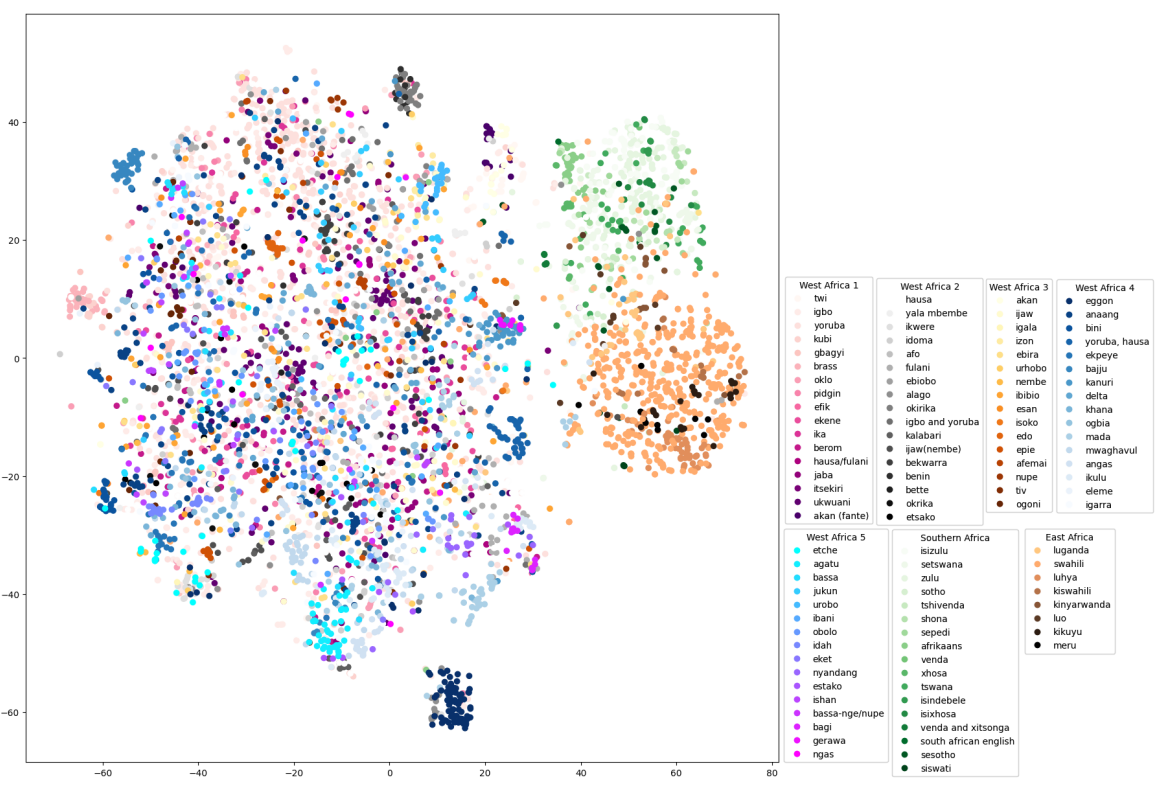

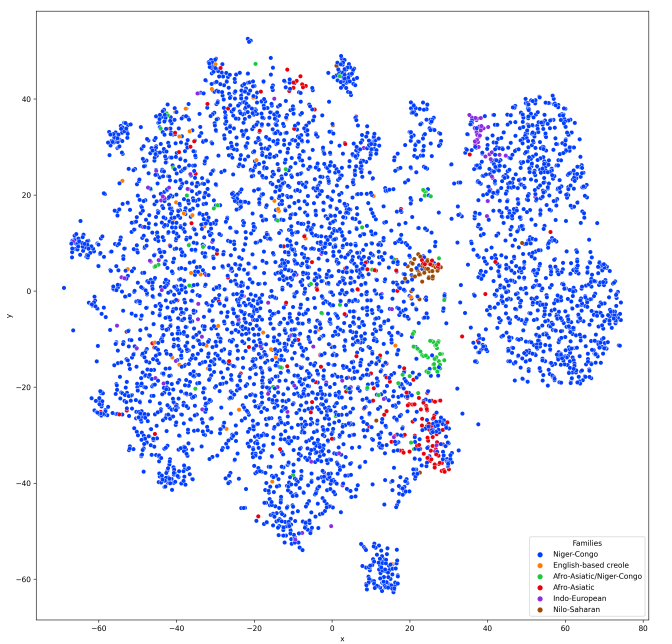

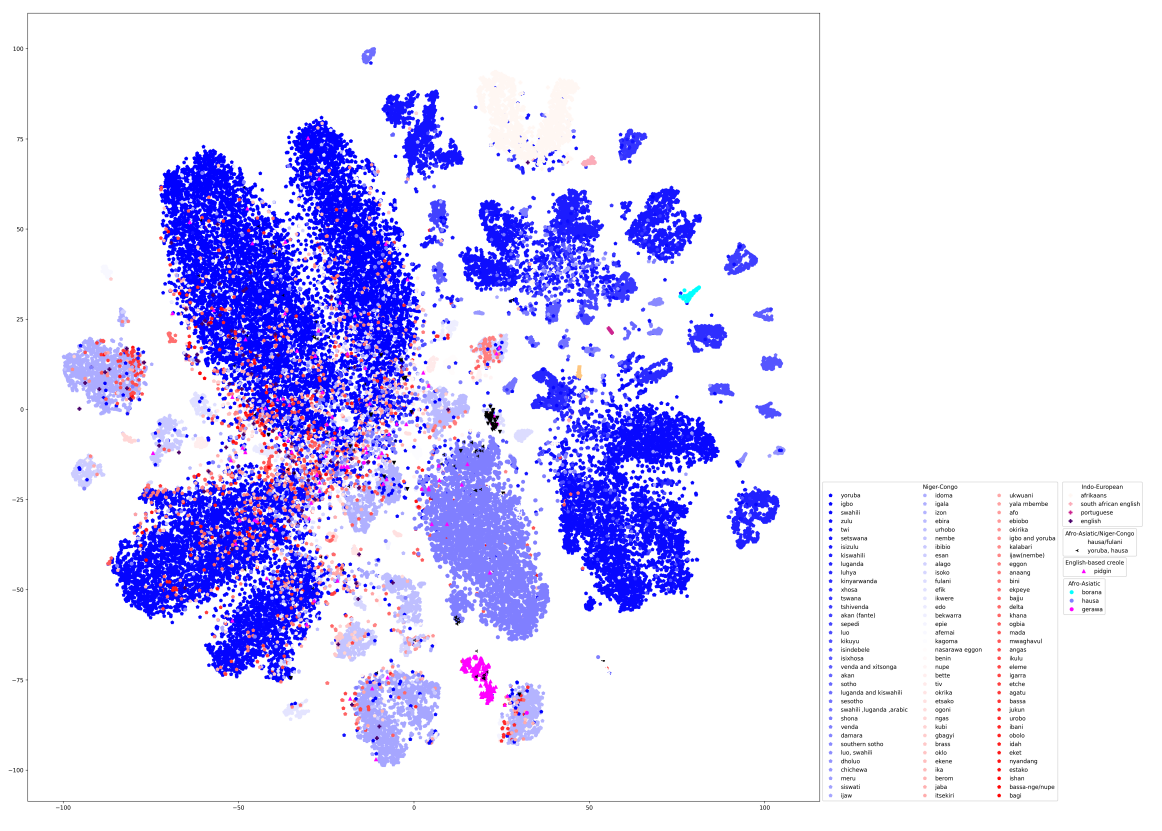

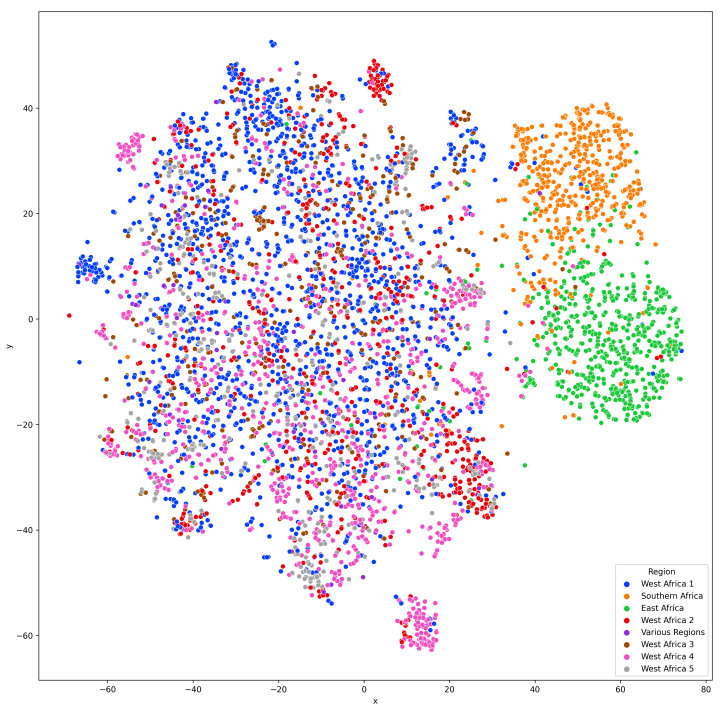

6 Conclusion

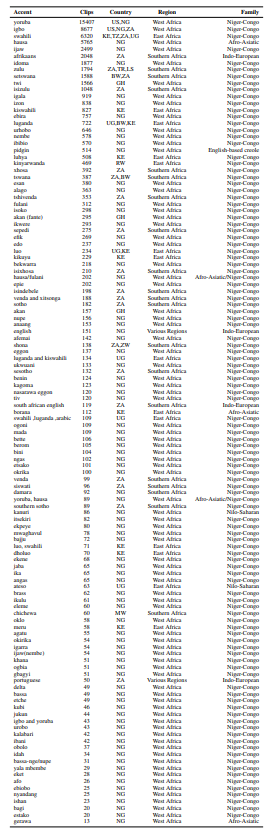

In conclusion, our research addresses the challenge of speech recognition for African-accented speech by exploring the linguistic relationships among accent embeddings obtained through AccentFold. Our exploratory analysis of AccentFold provides insights into the spatial relationships between accents and reveals that accent embeddings group together by geographic and language-family similarity, capturing phonological and morphological regularities associated with language families. Furthermore, in Section 4.1 we reveal two interesting relationships among some African accents that the Ethnologue does not characterize. Our experimental setup demonstrates the practicality of AccentFold as an accent subset selection method for adapting ASR models to targeted accents. With a WER improvement of 3.5%, AccentFold presents a promising approach for improving ASR performance on accented speech, particularly for African accents, where data scarcity and budget constraints pose significant challenges. Our research paves the way for a deeper understanding of accent diversity and linguistic affiliations, opening new avenues for leveraging linguistic knowledge when adapting ASR systems to target accents.
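The subset-selection idea can be illustrated with a small sketch. It assumes, for illustration only, that each accent is represented by a single embedding vector and that the accents pooled for fine-tuning are the k candidates most similar to the target accent under cosine similarity. The helper names (`cosine_similarity`, `select_accent_subset`), the accent labels, and the three-dimensional vectors are hypothetical and are not AccentFold output.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


def select_accent_subset(
    target: str,
    embeddings: Dict[str, Sequence[float]],
    k: int,
) -> List[str]:
    """Hypothetical helper: return the k accents (excluding the target) whose
    embeddings are most cosine-similar to the target accent's embedding."""
    target_vec = embeddings[target]
    candidates = [name for name in embeddings if name != target]
    # Sort by descending similarity; ties are broken alphabetically so the
    # result is deterministic.
    ranked = sorted(
        candidates,
        key=lambda name: (-cosine_similarity(target_vec, embeddings[name]), name),
    )
    return ranked[:k]


# Toy, made-up embeddings for hypothetical accents (not real AccentFold output).
toy_embeddings = {
    "accent_a": [1.0, 0.0, 0.0],
    "accent_b": [0.9, 0.1, 0.0],
    "accent_c": [0.0, 1.0, 0.0],
    "accent_d": [0.7, 0.7, 0.0],
}

print(select_accent_subset("accent_a", toy_embeddings, k=2))  # → ['accent_b', 'accent_d']
```

In this sketch, k is the knob that trades the amount of pooled training data against how closely the selected accents resemble the target.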

Limitations

One limitation of our study is that we fine-tuned a single pre-trained model in our experiments. While the chosen model demonstrated promising performance, relying on one model may limit the generalizability and robustness of our findings. Incorporating multiple pre-trained models with varying architectures and configurations would provide a more comprehensive evaluation of ASR performance.

Furthermore, our study focuses primarily on improving ASR performance for English with a focus on African accents. Consequently, our findings may not transfer directly to languages outside the African continent. The characteristics and phonetic variations of non-African accents may require tailored approaches to improving ASR systems in other linguistic contexts. Future studies should expand the scope to a broader range of languages and accents to assess and improve the generalizability of our method beyond African languages.

t-SNE, a stochastic dimensionality reduction algorithm, is highly effective at preserving local structure and representing non-linear relationships in data (Roca et al., 2023). Hence, it serves as a versatile and robust tool for visualizing high-dimensional data and has been used extensively across many domains; for example, in the medical domain it is used to visualize and understand single-cell sequencing data (Becht et al., 2019; Kobak and Berens, 2019). However, t-SNE is primarily a data visualization tool. Therefore, the insights discussed in Section 4 derive solely from the exploratory analysis conducted with AccentFold and do not rest on any inherent capability of t-SNE itself. Results obtained from t-SNE analysis should be interpreted with caution, as previous research has demonstrated (Roca et al., 2023; Becht et al., 2018).
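One way to put this caution into practice is to check how much local structure a 2-D projection actually keeps. The sketch below computes a simple k-nearest-neighbor overlap score: for each point, the fraction of its k nearest neighbors in the original embedding space that are also among its k nearest neighbors in the projection, averaged over all points. This score is an illustrative choice, a simplified relative of neighborhood-preservation measures such as trustworthiness, and it is not the cross-entropy test proposed by Roca et al. (2023). The function names and toy coordinates are hypothetical; in practice the 2-D coordinates would come from a t-SNE run on the accent embeddings.

```python
import math
from typing import List, Sequence, Set


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def knn_indices(points: List[Sequence[float]], i: int, k: int) -> Set[int]:
    """Indices of the k nearest neighbors of points[i], excluding i itself."""
    others = [j for j in range(len(points)) if j != i]
    # Sort by distance; ties are broken by index so results are deterministic.
    others.sort(key=lambda j: (euclidean(points[i], points[j]), j))
    return set(others[:k])


def knn_overlap(
    high_dim: List[Sequence[float]],
    low_dim: List[Sequence[float]],
    k: int,
) -> float:
    """Mean fraction of each point's k original-space neighbors that are also
    among its k neighbors in the projection (1.0 means every local
    neighborhood is preserved, 0.0 means none are)."""
    if len(high_dim) != len(low_dim):
        raise ValueError("both point sets must describe the same items")
    if not 0 < k < len(high_dim):
        raise ValueError("k must satisfy 0 < k < number of points")
    total = 0.0
    for i in range(len(high_dim)):
        shared = knn_indices(high_dim, i, k) & knn_indices(low_dim, i, k)
        total += len(shared) / k
    return total / len(high_dim)


# Hypothetical toy data: two tight pairs of points in 3-D.
high_dim_points = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [5.0, 5.0, 5.0], [5.1, 5.0, 5.0]]
# A projection that keeps each pair together ...
faithful_2d = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
# ... and one that splits every pair across the two clusters.
scrambled_2d = [[0.0, 0.0], [5.0, 5.0], [0.1, 0.0], [5.1, 5.0]]

print(knn_overlap(high_dim_points, faithful_2d, k=1))   # → 1.0
print(knn_overlap(high_dim_points, scrambled_2d, k=1))  # → 0.0
```

A score well below 1.0 would indicate that apparent clusters in the plot should not be read as neighborhoods in the embedding space. Because t-SNE is stochastic, recomputing the score over several random seeds and perplexity values is a cheap additional sanity check.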

Ethics Statement

We use the AfriSpeech-200 dataset (Olatunji et al., 2023b) to run the experiments in this paper. This dataset is released under CC BY-NC-SA 4.0. Since we use it only for research purposes and not for any commercial purpose, our use is consistent with the license. We do not foresee any harmful effects or uses of the proposed methodology or the models. We release all artefacts created as part of this work under CC BY-NC-SA 4.0.

References

Alëna Aksënova, Zhehuai Chen, Chung-Cheng Chiu, Daan van Esch, Pavel Golik, Wei Han, Levi King, Bhuvana Ramabhadran, Andrew Rosenberg, Suzan Schwartz, and Gary Wang. 2022. Accented speech recognition: Benchmarking, pre-training, and diverse data. arXiv preprint arXiv:2205.08014.

Dario Amodei, Sundaram Ananthanarayanan, Rishita Anubhai, Jin Bai, Eric Battenberg, Carl Case, Jared Casper, Bryan Catanzaro, Jingdong Chen, Mike Chrzanowski, Adam Coates, Gregory Frederick Diamos, Erich Elsen, Jesse Engel, Linxi (Jim) Fan, Christopher Fougner, Awni Y. Hannun, Billy Jun, Tony Xiao Han, Patrick LeGresley, Xiangang Li, Libby Lin, Sharan Narang, A. Ng, Sherjil Ozair, Ryan J. Prenger, Sheng Qian, Jonathan Raiman, Sanjeev Satheesh, David Seetapun, Shubho Sengupta, Anuroop Sriram, Chong-Jun Wang, Yi Wang, Zhiqian Wang, Bo Xiao, Yan Xie, Dani Yogatama, Junni Zhan, and Zhenyao Zhu. 2015. Deep Speech 2: End-to-end speech recognition in English and Mandarin. ArXiv, abs/1512.02595.

Anonymous. 2023. Advancing african clinical speech recognition with generative and discriminative multitask supervision. Under review at unnamed conference.

Rosana Ardila, Megan Branson, Kelly Davis, Michael Henretty, M. Kohler, Josh Meyer, Reuben Morais, Lindsay Saunders, Francis M. Tyers, and Gregor Weber. 2019. Common Voice: A massively-multilingual speech corpus. International Conference on Language Resources and Evaluation.

Alexei Baevski, Yuhao Zhou, Abdelrahman Mohamed, and Michael Auli. 2020. wav2vec 2.0: A framework for self-supervised learning of speech representations. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual.

Martijn Bartelds and Martijn Wieling. 2022. Quantifying language variation acoustically with few resources. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 3735–3741, Seattle, United States. Association for Computational Linguistics.

Etienne Becht, Leland McInnes, John Healy, Charles-Antoine Dutertre, Immanuel W H Kwok, Lai Guan Ng, Florent Ginhoux, and Evan W Newell. 2018. Dimensionality reduction for visualizing single-cell data using UMAP. Nature Biotechnology, 37(1):38–44.

Etienne Becht, Leland McInnes, John Healy, Charles-Antoine Dutertre, Immanuel W H Kwok, Lai Guan Ng, Florent Ginhoux, and Evan W Newell. 2019. Dimensionality reduction for visualizing single-cell data using UMAP. Nature Biotechnology.

Lyle Campbell. 2008. Ethnologue: Languages of the world.

Maharajan Chellapriyadharshini, Anoop Toffy, Srinivasa Raghavan K. M., and V Ramasubramanian. 2018. Semi-supervised and active-learning scenarios: Efficient acoustic model refinement for a low resource Indian language. In Interspeech 2018. ISCA.

Bernard Comrie. 1987. The world’s major languages.

Alexis Conneau, Alexei Baevski, Ronan Collobert, Abdelrahman Mohamed, and Michael Auli. 2020. Unsupervised cross-lingual representation learning for speech recognition. In Interspeech.

Marie-Catherine de Marneffe and Joakim Nivre. 2019. Dependency grammar. Annual Review of Linguistics, 5(1):197–218.

Alex DiChristofano, Henry Shuster, Shefali Chandra, and Neal Patwari. 2022. Performance disparities between accents in automatic speech recognition. arXiv preprint arXiv:2208.01157.

Siyuan Feng, Olya Kudina, Bence Mark Halpern, and Odette Scharenborg. 2021. Quantifying bias in automatic speech recognition. ArXiv, abs/2103.15122.

Nikhil Garg, Londa Schiebinger, Dan Jurafsky, and James Zou. 2018. Word embeddings quantify 100 years of gender and ethnic stereotypes. Proceedings of the National Academy of Sciences, 115(16):E3635–E3644.

Alex Graves, Santiago Fernández, Faustino Gomez, and Jürgen Schmidhuber. 2006. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, ICML ’06, page 369–376, New York, NY, USA. Association for Computing Machinery.

Tom Güldemann. 2018. Historical linguistics and genealogical language classification in Africa. The languages and linguistics of Africa, pages 58–444.

Arthur Hinsvark, Natalie Delworth, Miguel Del Rio, Quinten McNamara, Joshua Dong, Ryan Westerman, Michelle Huang, Joseph Palakapilly, Jennifer Drexler, Ilya Pirkin, Nishchal Bhandari, and Miguel Jette. 2021. Accented speech recognition: A survey. arXiv preprint arXiv:2104.10747.

Abhinav Jain, Minali Upreti, and Preethi Jyothi. 2018. Improved accented speech recognition using accent embeddings and multi-task learning. In Proc. Interspeech 2018, pages 2454–2458.

Shelly Jain, Aditya Yadavalli, Ganesh S Mirishkar, and Anil Kumar Vuppala. 2023. How do phonological properties affect bilingual automatic speech recognition? 2022 IEEE Spoken Language Technology Workshop (SLT), pages 763–770.

Kartik Khandelwal, Preethi Jyothi, Abhijeet Awasthi, and Sunita Sarawagi. 2020. Black-box adaptation of ASR for accented speech. In Interspeech.

Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

Dmitry Kobak and Philipp Berens. 2019. The art of using t-SNE for single-cell transcriptomics. Nature Communications.

Allison Koenecke, Andrew Nam, Emily Lake, Joe Nudell, Minnie Quartey, Zion Mengesha, Connor Toups, John R Rickford, Dan Jurafsky, and Sharad Goel. 2020. Racial disparities in automated speech recognition. Proceedings of the National Academy of Sciences, 117(14):7684–7689.

Suraj Kothawade, Anmol Mekala, D.Chandra Sekhara Hetha Havya, Mayank Kothyari, Rishabh Iyer, Ganesh Ramakrishnan, and Preethi Jyothi. 2023. DITTO: Data-efficient and fair targeted subset selection for ASR accent adaptation. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 5810–5822, Toronto, Canada. Association for Computational Linguistics.

Bo Li, Tara N. Sainath, Khe Chai Sim, Michiel Bacchiani, Eugene Weinstein, Patrick Nguyen, Zhifeng Chen, Yanghui Wu, and Kanishka Rao. 2018. Multi-dialect speech recognition with a single sequence-to-sequence model. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 4749–4753.

Jialu Li, Vimal Manohar, Pooja Chitkara, Andros Tjandra, Michael Picheny, Frank Zhang, Xiaohui Zhang, and Yatharth Saraf. 2021a. Accent-robust automatic speech recognition using supervised and unsupervised wav2vec embeddings.

Jialu Li, Vimal Manohar, Pooja Chitkara, Andros Tjandra, Michael Picheny, Frank Zhang, Xiaohui Zhang, and Yatharth Saraf. 2021b. Accent-robust automatic speech recognition using supervised and unsupervised wav2vec embeddings. arXiv preprint arXiv:2110.03520.

Tomas Mikolov, Ilya Sutskever, Kai Chen, G. Corrado, and J. Dean. 2013. Distributed representations of words and phrases and their compositionality. NIPS.

Antoine Nzeyimana and Andre Niyongabo Rubungo. 2022. KinyaBERT: A morphology-aware Kinyarwanda language model. Annual Meeting of the Association for Computational Linguistics.

Tobi Olatunji, Tejumade Afonja, Bonaventure F. P. Dossou, Atnafu Lambebo Tonja, Chris Chinenye Emezue, Amina Mardiyyah Rufai, and Sahib Singh. 2023a. AfriNames: Most ASR models "butcher" African names. arXiv preprint arXiv:2306.00253.

Tobi Olatunji, Tejumade Afonja, Aditya Yadavalli, Chris Chinenye Emezue, Sahib Singh, Bonaventure F. P. Dossou, Joanne Osuchukwu, Salomey Osei, Atnafu Lambebo Tonja, Naome Etori, and Clinton Mbataku. 2023b. AfriSpeech-200: Pan-African accented speech dataset for clinical and general domain ASR.

Archiki Prasad and Preethi Jyothi. 2020. How accents confound: Probing for accent information in end-to-end speech recognition systems. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 3739–3753, Online. Association for Computational Linguistics.

Carlos P. Roca, Oliver T. Burton, Julika Neumann, Samar Tareen, Carly E. Whyte, Vaclav Gergelits, Rafael V. Veiga, Stéphanie Humblet-Baron, and Adrian Liston. 2023. A cross entropy test allows quantitative statistical comparison of t-SNE and UMAP representations. Cell Reports Methods, 3(1):100390.

H. Sakoe and S. Chiba. 1978. Dynamic programming algorithm optimization for spoken word recognition. IEEE Transactions on Acoustics, Speech, and Signal Processing, 26(1):43–49.

Ramon Sanabria, Nikolay Bogoychev, Nina Markl, Andrea Carmantini, Ondrej Klejch, and Peter Bell. 2023. The Edinburgh International Accents of English Corpus: Towards the Democratization of English ASR. In ICASSP 2023.

Bidisha Sharma, Maulik C. Madhavi, and Haizhou Li. 2021. Leveraging acoustic and linguistic embeddings from pretrained speech and language models for intent classification. IEEE International Conference on Acoustics, Speech and Signal Processing.

Tuende Szalay, Mostafa Shahin, Beena Ahmed, and Kirrie Ballard. 2022. Knowledge of accent differences can be used to predict speech recognition. In Proc. Interspeech 2022, pages 1372–1376.

Shubham Toshniwal, Tara N. Sainath, Ron J. Weiss, Bo Li, Pedro Moreno, Eugene Weinstein, and Kanishka Rao. 2018. Multilingual speech recognition with a single end-to-end model. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 4904–4908.

Laurens van der Maaten and Geoffrey E. Hinton. 2008. Visualizing data using t-SNE. Journal of Machine Learning Research, 9:2579–2605.

Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander M. Rush. 2020. HuggingFace’s Transformers: State-of-the-art natural language processing.

Aditya Yadavalli, Ganesh Mirishkar, and Anil Kumar Vuppala. 2022a. Multi-Task End-to-End Model for Telugu Dialect and Speech Recognition. In Proc. Interspeech 2022, pages 1387–1391.

Aditya Yadavalli, Ganesh Sai Mirishkar, and Anil Vuppala. 2022b. Exploring the effect of dialect mismatched language models in Telugu automatic speech recognition. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop, pages 292–301, Hybrid: Seattle, Washington + Online. Association for Computational Linguistics.

Jicheng Zhang, Yizhou Peng, Van Tung Pham, Haihua Xu, Hao Huang, and Chng Eng Siong. 2021. E2E-based multi-task learning approach to joint speech and accent recognition. In Interspeech.

This paper is available on arxiv under CC BY-SA 4.0 DEED license.